Scientific research is based on the relationship between the reality of nature, as it is observed, and a representation of this reality, formulated by a theory in mathematical language. If all the consequences of the theory are confirmed by experiment, the theory is considered validated. This approach, which has been used for nearly four centuries, has built a consistent body of knowledge. But these advances have been made thanks to the intelligence of human beings who, despite everything, can still hold onto their preexisting beliefs and biases. This can affect the progress of science, even for the greatest minds.

The first mistake

In his masterwork, the theory of general relativity, Einstein wrote the equation describing the evolution of the universe over time. The solution to this equation shows that the universe is unstable, not a huge sphere of constant volume with stars sliding around inside it, as was believed at the time.

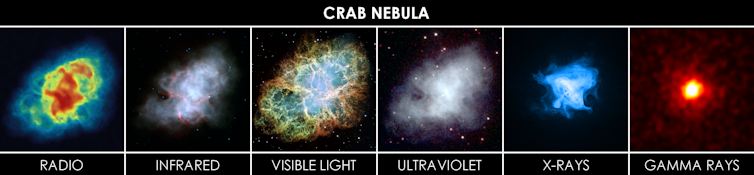

At the beginning of the 20th century, people lived with the well-established idea of a static universe where the motion of stars never varies. This is probably due to Aristotle’s teachings, stating that the sky is immutable, unlike Earth, which is perishable. This idea caused a historical anomaly: in 1054, the Chinese noticed the appearance of a new light in the sky, but no European document mentions it. Yet it could be seen in full daylight and lasted for several weeks. It was a supernova, that is, a dying star, the remnants of which can still be seen as the Crab Nebula. The prevailing thinking in Europe prevented people from accepting a phenomenon that so utterly contradicted the idea of an unchanging sky. A supernova is a very rare event, one that can be observed with the naked eye only about once a century. The most recent one dates back to 1987. So Aristotle was almost right in thinking that the sky was unchanging – on the scale of a human life at least.

The Crab Nebula, observed today at different wavelengths, was not recorded by Europeans when it appeared in 1054. Torres997/Wikimedia, Radio: NRAO/AUI and M. Bietenholz, J.M. Uson, T.J. Cornwell; Infrared: NASA/JPL-Caltech/R. Gehrz, University of Minnesota; Visible light: NASA, ESA, J. Hester and A. Loll, Arizona State University; Ultraviolet: NASA/Swift/E. Hoversten, PSU; X-rays: NASA/CXC/SAO/F. Seward and collaborators; Gamma rays: NASA/DOE/Fermi LAT/R. Buehler, CC BY-SA

To remain in accordance with the idea of a static universe, Einstein introduced a cosmological constant into his equations, which froze the state of the universe. His intuition led him astray: in 1929, when Hubble demonstrated that the universe is expanding, Einstein admitted that he had made “his biggest mistake”.
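For readers who would like to see where the constant sits, the field equations of general relativity are commonly written as below. This is the standard modern textbook form, not a quotation from Einstein's original paper, and sign conventions vary between authors. Here the symbol Λ is the cosmological constant, the left-hand side describes the geometry of space-time, and the right-hand side describes its matter and energy content.

```latex
R_{\mu\nu} - \frac{1}{2} R \, g_{\mu\nu} + \Lambda \, g_{\mu\nu}
  = \frac{8 \pi G}{c^{4}} \, T_{\mu\nu}
```

Setting Λ to zero recovers the equations without the added term.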

Quantum randomness

Quantum mechanics developed around the same time as relativity. It describes physics at the scale of the infinitely small. Einstein contributed greatly to the field in 1905, by interpreting the photoelectric effect as being a collision between electrons and photons – that is, infinitesimal particles carrying pure energy. In other words, light, which had traditionally been described as a wave, behaves like a stream of particles. It was this step forward, not the theory of relativity, that earned Einstein the Nobel Prize in 1921.

But despite this vital contribution, he remained stubborn in rejecting the key lesson of quantum mechanics – that the world of particles is not bound by the strict determinism of classical physics. The quantum world is probabilistic. We only know how to predict the probability of an occurrence among a range of possibilities.

In Einstein’s blindness, once again we can see the influence of Greek philosophy. Plato taught that thought should remain ideal, free from the contingencies of reality – a noble idea, but one that does not follow the precepts of science. Knowledge demands perfect consistency with all predicted facts, whereas belief is based on likelihood, produced by partial observations. Einstein himself was convinced that pure thought was capable of fully capturing reality, but quantum randomness contradicts this hypothesis.

In practice, this randomness is not pure noise, as it is constrained by Heisenberg’s uncertainty principle. This principle imposes collective determinism on groups of particles – a single electron is free, as we do not know how to calculate its trajectory when it leaves a hole, but a million electrons draw a diffraction pattern of dark and light fringes that we do know how to calculate.

Result of a Young interference experiment: the pattern is formed bit by bit with the arrival of electrons (8 electrons on photo a, 270 electrons on photo b, 2,000 on photo c, and 60,000 on photo d) that eventually form vertical fringes called interference fringes. Dr. Tonomura/Wikimedia, CC BY-SA
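The way single unpredictable arrivals add up to a predictable pattern can be imitated with a few lines of Python. This is only a toy sketch, not a model of the actual experiment: it assumes an idealised cos² intensity profile, and the names `FRINGE_SPACING`, `SCREEN_HALF_WIDTH`, `detect_electron`, `histogram` and `simulate` are invented for the illustration. Each simulated electron lands at a random position, yet the histogram of many landings shows regular fringes.

```python
import math
import random

FRINGE_SPACING = 1.0     # assumed distance between bright fringes (arbitrary units)
SCREEN_HALF_WIDTH = 4.0  # assumed half-width of the detection screen


def intensity(x):
    """Idealised two-slit intensity at position x, between 0 and 1."""
    return math.cos(math.pi * x / FRINGE_SPACING) ** 2


def detect_electron(rng):
    """Draw one landing position by rejection sampling from intensity(x)."""
    while True:
        x = rng.uniform(-SCREEN_HALF_WIDTH, SCREEN_HALF_WIDTH)
        if rng.random() < intensity(x):
            return x


def simulate(n_electrons, seed=0):
    """Return the landing positions of n_electrons independent electrons."""
    rng = random.Random(seed)
    return [detect_electron(rng) for _ in range(n_electrons)]


def histogram(positions, bins=32):
    """Count how many electrons landed in each slice of the screen."""
    counts = [0] * bins
    width = 2 * SCREEN_HALF_WIDTH / bins
    for x in positions:
        index = min(int((x + SCREEN_HALF_WIDTH) / width), bins - 1)
        counts[index] += 1
    return counts


if __name__ == "__main__":
    # Same electron counts as the four photos in the caption above.
    for n in (8, 270, 2000, 60000):
        counts = histogram(simulate(n))
        peak = max(counts) or 1
        row = "".join(" .:*#"[min(4, 4 * c // peak)] for c in counts)
        print(f"{n:>6} electrons: {row}")
```

With only a handful of electrons the printed row looks like scattered marks; with tens of thousands, the alternation of dense and sparse slices becomes clear, even though no individual landing position can be predicted.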

Einstein did not accept this fundamental indeterminism, as summed up by his provocative verdict: “God does not play dice with the universe.” He imagined the existence of hidden variables, i.e., yet-to-be-discovered numbers beyond the mass, charge and spin that physicists use to describe particles. But experiments did not support this idea. It is undeniable that a reality exists that transcends our understanding – we cannot know everything about the world of the infinitely small.

The fortuitous whims of the imagination

Within the process of the scientific method, there is still a stage that is not completely objective. This is what leads to conceptualising a theory, and Einstein, with his thought experiments, gives a famous example of it. He stated that “imagination is more important than knowledge”. Indeed, when looking at disparate observations, a physicist must imagine an underlying law. Sometimes, several theoretical models compete to explain a phenomenon, and it is only at this point that logic takes over again.

“The role of intelligence is not to discover, but to prepare. It is only good for service tasks.” (Simone Weil, “Gravity and Grace”)

In this way, the progress of ideas springs from what is called intuition. It is a sort of jump in knowledge that goes beyond pure rationality. The line between objective and subjective is no longer completely solid. Thoughts come from neurons under the effect of electromagnetic impulses, some of them being particularly fertile, as if there were a short circuit between cells, where chance is at work.

But these intuitions, or “flowers” of the human spirit, are not the same for everybody – Einstein’s brain produced “E = mc²”, whereas Proust’s brain came up with an admirable metaphor. Intuition pops up randomly, but this randomness is constrained by each individual’s experience, culture and knowledge.

The benefits of randomness

It should not come as a shock that there is a reality not grasped by our own intelligence. Without randomness, we are guided by our instincts and habits, everything that makes us predictable. What we do is limited almost exclusively to this first layer of reality, with ordinary concerns and obligatory tasks. But there is another layer of reality, the one where obvious randomness is the trademark.

“Never will an administrative or academic effort replace the miracles of chance to which we owe great men.” (Honoré de Balzac, “Cousin Pons”)

Einstein is an example of an inventive and free spirit; yet he still kept his biases. His “first mistake” can be summed up as: “I refuse to believe in a beginning of the universe.” However, experiments proved him wrong. His verdict on God playing dice means, “I refuse to believe in chance”. Yet quantum mechanics involves obligatory randomness. His sentence raises the question of whether he would believe in God in a world without chance, which would greatly curtail our freedom, as we would then be confined in absolute determinism. Einstein was stubborn in his refusal. For him, the human brain should be capable of knowing what the universe is. With a lot more modesty, Heisenberg teaches us that physics is limited to describing how nature reacts in given circumstances.

Quantum theory demonstrates that total understanding is not available to us. In return, it offers randomness, which brings frustrations and dangers, but also benefits.

“Man can only escape the laws of this world for a flash of time. Instants of pausing, of contemplating, of pure intuition… It’s with these flashes that he is capable of the superhuman.” (Simone Weil, “Gravity and Grace”)

Einstein, a legendary physicist, is the perfect example of an imaginative being. His refusal of randomness is therefore a paradox, because randomness is what makes intuition possible, allowing for creative processes in both science and art.

Translated from the French by Rosie Marsland for Fast ForWord.
