As universities increasingly integrate artificial intelligence (AI) into their administrative and disciplinary processes, concerns over privacy, due process, and algorithmic bias are mounting. AI-driven surveillance tools now monitor student emails, internet activity, and classroom interactions, and some attempt to predict behavior from patterns in that data, raising serious legal and ethical questions about the extent of institutional oversight. While proponents argue these technologies enhance security and prevent misconduct, critics warn they erode student rights, disproportionately target marginalized groups, and operate with minimal transparency or accountability.

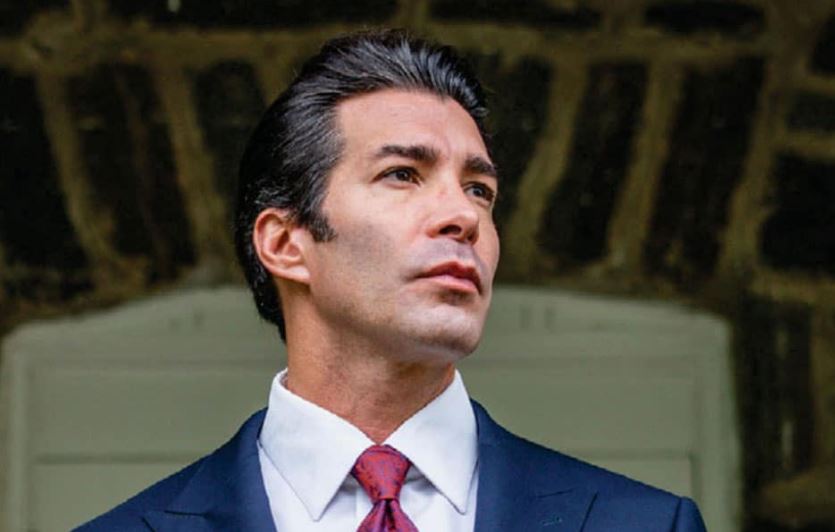

Joseph Lento, founder of Lento Law Firm, is representing students involved in disciplinary cases where AI-based investigations are playing a growing role. As these cases increase, Lento Law Firm is examining how these tools are being used against students and whether due process protections are keeping pace with technological advancements.

The Expanding Role of AI in Campus Oversight

AI surveillance in universities extends far beyond traditional security measures. Today, institutions rely on AI-powered tools to assess student behavior, flag potential risks, and even recommend disciplinary actions based on predictive analytics.

1. AI-Powered Student Monitoring Systems

- Universities now use AI-driven monitoring software to track student emails, online behavior, and social media activity, often without explicit student consent.

- Some schools deploy facial recognition and biometric tracking in classrooms, residence halls, and libraries to monitor attendance and enforce discipline.

- Predictive analytics tools claim to identify students at risk of violating academic integrity policies before any infraction occurs.

2. AI in Academic Integrity Investigations

- AI-powered plagiarism detection programs are increasingly used not just to identify copied work, but to flag potential AI-generated assignments, leading to wrongful accusations against students who may not have violated any policies.

- Professors and disciplinary boards often treat AI-generated suspicion reports as conclusive evidence, even when the software’s accuracy remains scientifically unverified.

Lento states, “As an education attorney defending students in disciplinary proceedings, I have seen firsthand how AI surveillance can be misused to build cases against students without their knowledge or consent. Universities must ensure that monitoring practices respect student privacy and uphold due process rights, rather than relying on AI as a tool for preemptive punishment. These institutions also run the risk of falsely accusing students of academic integrity issues based solely on technology that has proven to demonstrate inaccuracies and biases against a variety of students.”

Due Process Concerns and Lack of Transparency

One of the most pressing issues surrounding AI in student discipline is the lack of due process protections. Many universities treat AI-generated reports as infallible, placing the burden on students to prove their innocence rather than requiring institutions to prove wrongdoing.

1. Limited Access to AI Algorithms and Evidence

- AI-generated reports used in academic dishonesty cases are often proprietary and undisclosed, leaving students unable to challenge the findings or verify the methodology.

- Some universities have denied student requests for access to the AI system’s decision-making process, citing “trade secrets” or “institutional security” concerns.

2. Preemptive Disciplinary Actions Based on Predictive AI

- Some AI models categorize students as “high risk” for violations based on online activity, past academic performance, or behavior patterns.

- Students have reported receiving disciplinary warnings for activities that had not yet occurred, solely because an AI model flagged them as likely offenders.

According to Lento, “From a legal standpoint, students should be given full access to the evidence being used against them, including the AI's methodology. Universities cannot shield AI systems from scrutiny while expecting students to defend themselves against unverifiable claims. Any disciplinary action based on predictive AI rather than actual misconduct is fundamentally flawed and legally questionable, certainly infringing upon a student’s Constitutionally provided due process rights.”

The Potential for Bias in AI Disciplinary Tools

Despite being marketed as objective and data-driven, AI disciplinary tools have been found to replicate human biases, disproportionately targeting students based on race, language patterns, and socioeconomic background.

1. Bias in AI-Powered Academic Misconduct Detection

- Studies have shown that plagiarism detection tools are more likely to falsely flag non-native English speakers due to algorithmic bias in linguistic pattern recognition.

- Predictive models trained on historical disciplinary records may reinforce past biases in enforcement, leading to disproportionate investigations into certain student groups.

2. Racial and Socioeconomic Disparities in AI Monitoring

- AI surveillance tools used in student conduct enforcement have been found to flag Black and Latino students at higher rates, raising questions about institutional discrimination.

- Low-income students who rely on shared devices or alternative internet access points may inadvertently trigger AI “anomalies,” resulting in unwarranted scrutiny.

Lento says, “AI bias in student discipline cases is not just a theoretical concern—it is a real issue that has already led to wrongful accusations and disproportionate penalties for marginalized students, particularly non-native English speakers and neurodivergent students. My team and I work to challenge these biased enforcement practices and ensure that students receive fair treatment under the law.”

The Legal Battles Ahead: Student Rights vs. Institutional AI Policies

With lawsuits over AI-driven discrimination in the corporate sector already emerging, legal scholars anticipate that AI-driven student discipline cases will become a major area of litigation in the coming years.

1. Growing Legal Challenges to AI Surveillance

- Universities are already facing lawsuits from students falsely accused of academic dishonesty due to faulty AI-generated plagiarism reports.

- Some legal experts argue that universities’ use of AI to monitor student activity without consent violates privacy protections, including the Fourth Amendment and FERPA.

2. Calls for AI Oversight and Regulatory Reforms

- Advocacy groups are pushing for clearer policies requiring institutions to disclose how AI systems are used in student discipline.

- Legal experts warn that without stronger oversight, AI could be weaponized against students in ways that violate civil liberties.

Lento states, “Legal challenges to AI-based disciplinary actions will likely increase, as students begin to fight back against unfair treatment. Institutions must be held accountable for using AI responsibly, and students should not be subjected to disciplinary actions based on secretive, flawed, or discriminatory AI systems. This will require a transparent approach to using AI technology in misconduct detection and a commitment to ensuring students are provided with the full breadth of their due process rights to review and challenge evidence against them.”

Conclusion

As AI continues to shape student disciplinary systems, the legal and ethical concerns surrounding algorithmic decision-making, privacy violations, and due process failures demand urgent attention. The unchecked expansion of AI surveillance and predictive disciplinary tools raises serious constitutional questions, particularly as universities increasingly rely on opaque algorithms rather than human judgment.

For students facing unjust AI-generated disciplinary actions, legal advocacy will be critical in challenging these emerging threats to academic fairness.

Lento adds, “Students who are unfairly accused due to AI-generated evidence should not accept disciplinary decisions without a fight. As an education attorney, I strongly advise students to seek legal representation early, demand transparency from their universities, and challenge any AI-based accusations that lack verifiable human oversight. Universities and schools that often pride themselves on breaking down barriers to education should be at the forefront of pushing for transparency and oversight.”

Disclaimer and Disclosure:

This article is an opinion piece for informational purposes only. Econotimes and its affiliates do not take responsibility for the views expressed. Readers should conduct independent research to form their own opinions.
