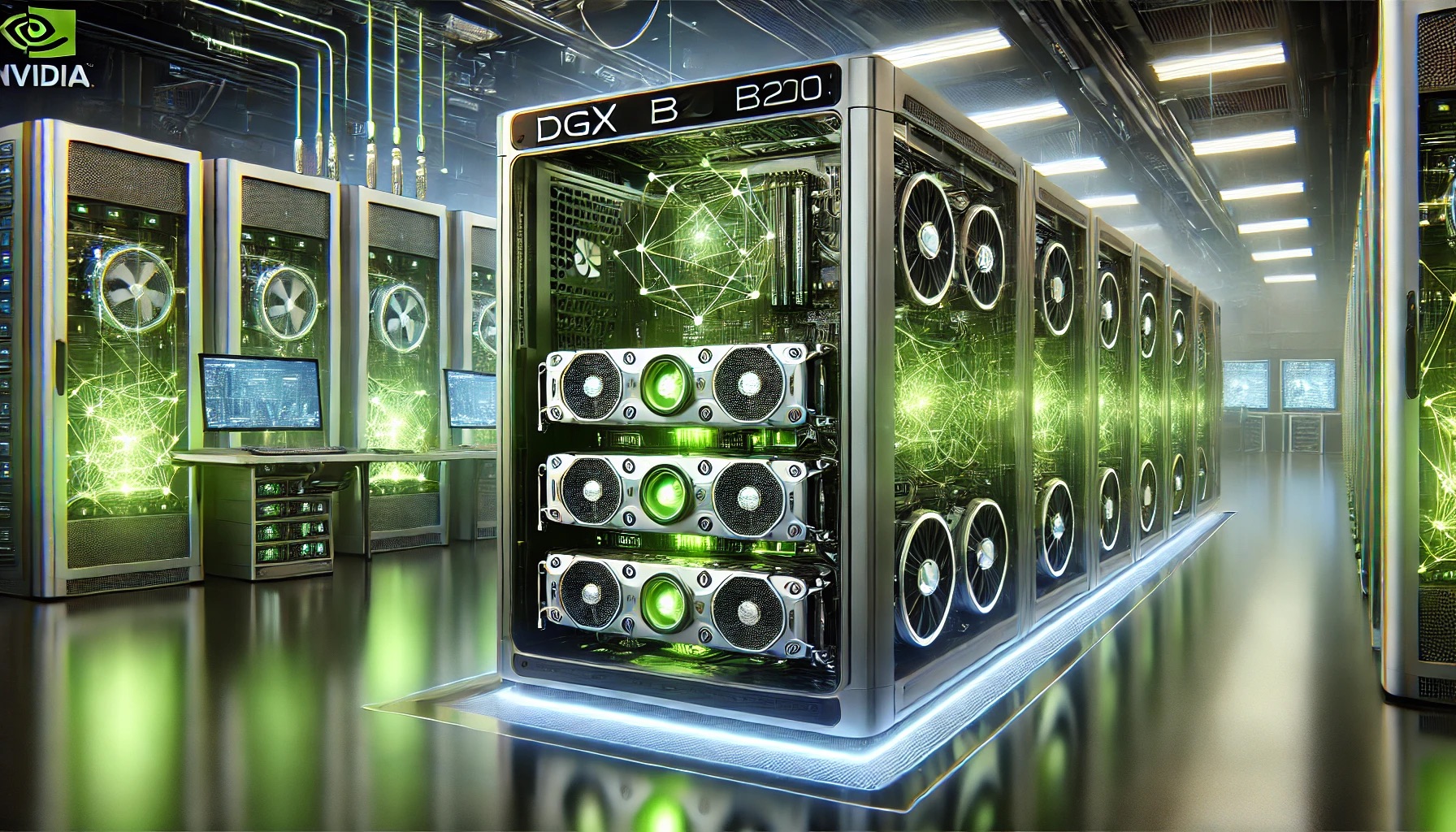

In a significant leap for artificial intelligence, OpenAI has obtained one of the first NVIDIA Blackwell DGX B200 systems. The cutting-edge GPUs are poised to accelerate the training and performance of OpenAI's advanced AI models.

OpenAI Boosts AI Power With Early NVIDIA DGX B200 System

B200 cards, which use NVIDIA's Blackwell architecture, are selling like hotcakes.

The B200 GPUs are NVIDIA's fastest data center GPUs to date, and orders for them have begun to roll in from a number of multinational corporations. NVIDIA had previously said that OpenAI would use the B200 GPUs. The company appears to be aiming to boost its AI computing capabilities by taking advantage of the B200's performance.

OpenAI Showcases NVIDIA's Blackwell System for AI Innovation

Earlier today, OpenAI's official X handle shared a photo of its staff with an early DGX B200 engineering sample. With the platform now at their office, they are ready to put the B200 to the test and train their AI models.

The DGX B200 is an all-in-one AI platform that will make use of the forthcoming Blackwell B200 GPUs for training, fine-tuning, and inference. Each DGX B200 houses eight B200 GPUs and provides up to 1.4 TB of GPU memory, with a maximum HBM3E memory bandwidth of 64 TB/s.
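To put the system-level figures in per-GPU terms, the short sketch below divides the quoted totals evenly across the eight GPUs. It assumes an even split and decimal units (1 TB = 1000 GB); the variable and function names are illustrative only and do not come from NVIDIA.

```python
# System-level figures quoted in the article for one DGX B200.
GPUS_PER_SYSTEM = 8
TOTAL_GPU_MEMORY_TB = 1.4          # "up to 1.4 TB" of GPU memory
TOTAL_HBM3E_BANDWIDTH_TBPS = 64    # maximum HBM3E bandwidth, TB/s


def per_gpu_share(system_total, gpu_count=GPUS_PER_SYSTEM):
    """Split a system-wide total evenly across the GPUs (an assumption)."""
    return system_total / gpu_count


# Assumption: decimal units, so 1 TB = 1000 GB.
memory_per_gpu_gb = per_gpu_share(TOTAL_GPU_MEMORY_TB) * 1000
bandwidth_per_gpu_tbps = per_gpu_share(TOTAL_HBM3E_BANDWIDTH_TBPS)

print(f"Memory per GPU:    about {memory_per_gpu_gb:.0f} GB")
print(f"Bandwidth per GPU: about {bandwidth_per_gpu_tbps:.0f} TB/s")
```

Under those assumptions, the rounded article figures work out to roughly 175 GB of memory and 8 TB/s of bandwidth per GPU.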

Blackwell GPUs Power Major Industry Players' AI Ambitions

The DGX B200, according to NVIDIA, can provide remarkable performance for AI models, with training speeds of up to 72 petaFLOPS and inference speeds of up to 144 petaFLOPS.

Blackwell GPUs have long piqued the curiosity of OpenAI, and CEO Sam Altman at one point hinted at the possibility of using them to train the company's AI models.

Global Tech Giants Jump on the Blackwell Bandwagon

With so many industry heavyweights already opting to use Blackwell GPUs to train their AI models, OpenAI certainly won't be left out. Amazon, Google, Meta, Microsoft, Tesla, xAI, and Dell Technologies are all part of this pack.

WCCFTECH has previously reported that, in addition to the 100,000 H100 GPUs now in use, xAI intends to use 50,000 B200 GPUs. Foxconn has also said that it will build the fastest supercomputer in Taiwan using B200 GPUs.

NVIDIA B200 Outshines Previous Generations in Power Efficiency

When compared to NVIDIA Hopper GPUs, Blackwell is both more powerful and more power efficient, making it an ideal choice for OpenAI's AI model training.

According to NVIDIA, the DGX B200 is capable of handling LLMs, chatbots, and recommender systems, and it boasts three times the training performance and fifteen times the inference performance of earlier generations.
