NVIDIA Corporation (NASDAQ: NVDA) used the CES 2026 trade show in Las Vegas to reaffirm its leadership in artificial intelligence infrastructure. The company announced that its next-generation Rubin data center platform is now in full production and on track for release later this year. The announcement highlights Nvidia's accelerated release cycle as competition intensifies from rivals such as Advanced Micro Devices (NASDAQ: AMD) and from custom silicon developed by major cloud providers.

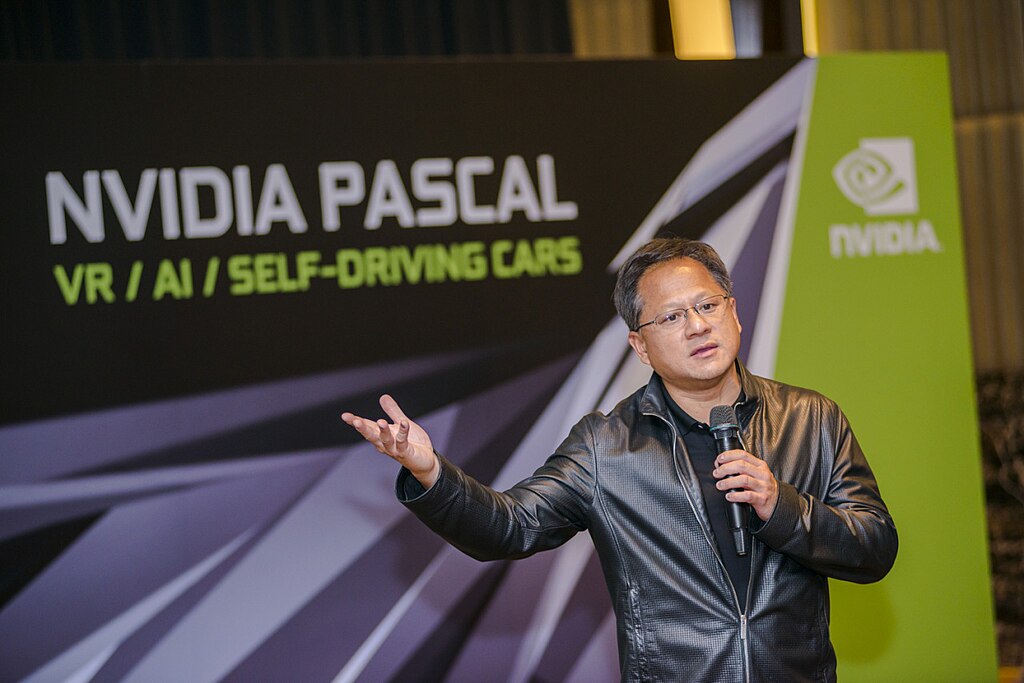

During his keynote address, CEO Jensen Huang revealed that all six chips in the Rubin platform have successfully returned from manufacturing partners and passed initial milestone tests. This puts the new AI accelerator systems on schedule for customer deployments in the second half of 2026. By unveiling Rubin early, Nvidia is signaling confidence in its roadmap while keeping enterprises closely aligned with its hardware ecosystem.

The Rubin GPU is designed to meet the growing demands of agentic AI models, which rely on multistep reasoning rather than simple pattern recognition. According to Nvidia, Rubin delivers 3.5 times the AI training performance and up to 5 times the inference performance of the current Blackwell architecture. The platform also introduces the new Vera CPU, which features 88 custom cores and offers double the performance of its predecessor. Nvidia says Rubin-based systems can match Blackwell's results with far fewer components, cutting the cost per token by as much as tenfold.

Positioned as a modular "AI factory" or "supercomputer in a box," the Rubin platform integrates the BlueField-4 DPU, which manages AI-native storage and long-term context memory. Nvidia says this design improves power efficiency by up to five times, a critical factor for hyperscale data centers. Early adopters include Microsoft (NASDAQ: MSFT), Amazon Web Services (NASDAQ: AMZN), Google Cloud (NASDAQ: GOOGL), and Oracle Cloud Infrastructure (NYSE: ORCL).

Beyond data centers, Nvidia also highlighted major advances in robotics and autonomous vehicles, calling the current period a "ChatGPT moment" for physical AI. New offerings such as the Alpamayo AI models for self-driving systems and the Jetson T4000 robotics module underscore Nvidia's bet that reasoning-based AI will drive a trillion-dollar infrastructure upgrade across industries.